The sale and advertisement of illicit drugs to young people on social media is a known problem. Class A drugs can be found within just a few swipes on Instagram, TikTok or Snapchat.

In 2019, Volteface carried out the first piece of research on this topic in the UK. Their report, DM for Details, found that 1 in 4 young people had already seen illicit drug advertisements on social media, which made them feel it was normal to see drugs advertised on these platforms. These results are concerning given the recent increase in drug consumption among young people and how central online platforms are to their lives.

Following Volteface’s lead, academia has since begun researching this emerging phenomenon. To understand the current state of academic research on the sale and advertisement of drugs on social media, we conducted a multidisciplinary scoping review. This means we searched for everything written on the topic and then summarised the findings in our review.

This research was then used to inform a call for evidence by Ofcom, the appointed regulator for the Online Safety Bill.

Algorithms are becoming smarter, so what?

Across the studies reviewed, we found that, on average, 13 in 100 posts analysed were advertising illegal drugs. It is difficult to say whether this figure is high or low. What was striking, however, is that the studies did not measure exposure rates: how many times a post was seen, rather than how many such posts existed.
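To make the difference between prevalence and exposure concrete, here is a small illustrative sketch. The numbers are hypothetical and are not data from the review: it assumes a sample of 100 posts, 13 of which are drug adverts, and invents view counts to show that the same 13% prevalence can correspond to very different levels of exposure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    is_drug_ad: bool  # was the post coded as advertising illicit drugs?
    views: int        # how many times the post was seen (hypothetical)


def prevalence(posts: list[Post]) -> float:
    """Share of posts that are drug adverts (what the reviewed studies counted)."""
    return sum(p.is_drug_ad for p in posts) / len(posts)


def exposure_share(posts: list[Post]) -> float:
    """Share of all views that landed on drug adverts."""
    total_views = sum(p.views for p in posts)
    ad_views = sum(p.views for p in posts if p.is_drug_ad)
    return ad_views / total_views


# Hypothetical samples: 13 adverts among 100 posts in both cases,
# but the adverts are rarely seen in one and widely seen in the other.
rarely_seen = [Post(True, 10) for _ in range(13)] + [Post(False, 100) for _ in range(87)]
widely_seen = [Post(True, 1000) for _ in range(13)] + [Post(False, 100) for _ in range(87)]

print(f"{prevalence(rarely_seen):.2f} {exposure_share(rarely_seen):.3f}")  # 0.13 0.015
print(f"{prevalence(widely_seen):.2f} {exposure_share(widely_seen):.3f}")  # 0.13 0.599
```

Prevalence is identical in both samples, yet the adverts account for about 1.5% of views in one and about 60% in the other. This is why counting posts alone cannot tell us how much drug content young people actually see.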

An important change we observed is that illicit drug detection algorithms are becoming smarter. Instead of relying only on drug-related keywords, they now detect drug-selling behaviour on social media using various input sources such as comments, emojis or pictures (see Figure 1). Smarter algorithms matter because they can rapidly identify and remove large quantities of harmful content before anyone sees it.
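A minimal sketch of how several input sources might be combined into a single score, assuming each source has already been reduced to a simple feature. The signal names, weights and 0.5 threshold are hypothetical choices for illustration and are not taken from any of the reviewed studies. Real systems typically learn such weights from labelled posts.

```python
from dataclasses import dataclass


@dataclass
class PostSignals:
    hashtag_match: bool       # uses a term from a drug-related hashtag list
    emoji_match: bool         # uses emojis associated with drug selling
    comments_ask_to_dm: bool  # comments contain phrases such as "DM for details"
    bio_has_contact: bool     # account bio points buyers to another channel
    image_score: float        # 0..1 output of a hypothetical image classifier


# Hypothetical weights; a real model would learn these from labelled data.
WEIGHTS = {
    "hashtag_match": 0.30,
    "emoji_match": 0.15,
    "comments_ask_to_dm": 0.20,
    "bio_has_contact": 0.15,
    "image_score": 0.20,
}
THRESHOLD = 0.5  # hypothetical cut-off for flagging a post


def drug_sale_score(s: PostSignals) -> float:
    """Weighted sum of all signals; booleans count as 0 or 1."""
    return sum(weight * float(getattr(s, name)) for name, weight in WEIGHTS.items())


def flagged(s: PostSignals) -> bool:
    return drug_sale_score(s) >= THRESHOLD


# A post that only matches a hashtag, versus one that avoids hashtags
# but shows every other selling signal.
keyword_only = PostSignals(True, False, False, False, 0.0)
no_keyword = PostSignals(False, True, True, True, 0.9)

print(flagged(keyword_only), flagged(no_keyword))  # False True
```

Because no single signal reaches the threshold alone, a seller who drops the tell-tale hashtags can still be caught by the other signals. A post that merely uses a drug-related term is not flagged on that basis alone.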

Figure 1. Example of a machine learning algorithm that uses various sources of input data, such as images, comments, bio information and user images, to complement a list of drug-related hashtags that informs the algorithm's search for illicit drug posts.


Another problem with this research was that most studies focused only on Twitter and Instagram. Apps popular with adolescents, such as TikTok, were rarely studied. This means we don’t know whether detection algorithms on understudied platforms are improving at the same rate.

We might be seeing only the tip of the iceberg

Research on this topic is very unevenly distributed across social media platforms. This is a significant finding, because platforms that were not previously thought of as social media are becoming venues for advertising and selling illicit drugs.

Anecdotal evidence suggests that gaming platforms such as Twitch or Roblox, clothing resale apps such as Depop, and e-commerce websites such as eBay or Craigslist are harbouring illicit drug advertisements.

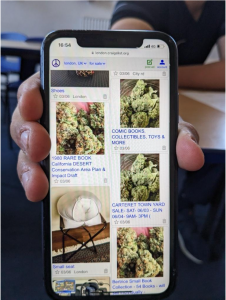

Figure 2. A student showing, on his phone, the Craigslist page where cannabis advertisements are listed as furniture.

We might be underestimating the prevalence of drugs on social media. We need to conduct research across various platforms used by young people – but that means also understanding what platforms young people are using.

So, is looking at more platforms enough to solve this problem? Well, no. The nature of these advertisements is changing.

When illicit drugs are marketed as healthy, glamourous and seemingly ‘safe’ products

Our review highlights a trend where illicit drug promotional content is changing to appeal to young people. Illicit drugs are being portrayed as healthy and glamourous products.

Studies revealed that drugs are being marketed differently to target specific groups of young people. For example, cannabis and body-enhancing drugs are now associated with “wellness” and “healthy lifestyles”. Study drugs (prescription stimulants) are framed within a culture of achievement and success through memes and funny content.

The influencer and social media era brings new challenges: products sold as within “legal concentrations” or marketed as “safe” are increasingly common on social media. There is no indication of whether these substances are actually what they claim to be.

Young people don’t feel compelled to report

In parallel to our review, we conducted a focus group with a class of Year 12 students.

Students in the focus group reported seeing drugs advertised most explicitly on Snapchat, through their contacts. They recognised that this platform is difficult to regulate because of its private messaging function. In contrast, more subtle but ‘funny’ content, such as other users consuming drugs or doing tricks, was seen mainly on Instagram and TikTok.

One of the most surprising results was that young people did not feel compelled to report illicit drug content on social media. When asked whether they had ever reported anything harmful or illegal they had seen on social media, all the students answered no. None of them thought other young people would do so either.

There is a mismatch between young people's attitudes and their behaviour. On the one hand, they are aware that this content on social media is an issue and said they feel uncomfortable when they see it. On the other hand, the presence of drugs on social media seems to have normalised this type of post: “most people are just so used to seeing it that it doesn’t really bother them so much.”

Seeing is not enough to act. As one student put it: “It doesn’t affect you enough to make you wanna report”. This was accompanied by a perception that reporting is ineffective and would not make a difference.

And now what? We need better data

Since DM for Details and the publication of our review, the Online Safety Bill has become law as the Online Safety Act 2023. Social media companies are now legally required to proactively detect and remove such content from their platforms.

But accurately detecting this content in the first place is a problem. While algorithms are indeed getting better at detecting posts about illicit drugs, they are inflexible. Ironically, harm reduction content produced by evidence-based research bodies and charities may be caught in the crossfire. Educational posts aimed at reducing harmful drug use are being taken down because the algorithms are not sensitive enough to tell them apart from drug advertisements.

In addition, the online drug trade is adaptive: offenders are now able to evade common detection algorithms and carry on advertising. On top of all this, young people don’t report such content because it is normalised and not even considered an issue.
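Both weaknesses described above can be illustrated with a deliberately naive keyword filter. The blocklist and example posts below are hypothetical and do not represent any platform's real system. The point is that matching words alone flags a harm reduction message just as readily as an advert, while an advert that avoids the listed words slips through.

```python
import re

# Hypothetical blocklist standing in for a keyword-only filter.
BLOCKLIST = {"mdma", "cocaine", "ketamine"}


def keyword_filter(text: str) -> bool:
    """Flag a post if any of its words appears on the blocklist."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return not words.isdisjoint(BLOCKLIST)


advert = "Top quality MDMA, DM for details"
harm_reduction = "If you take MDMA, start low and go slow"
disguised_advert = "Party sweets available, DM for details"

print(keyword_filter(advert))            # True: caught
print(keyword_filter(harm_reduction))    # True: false positive
print(keyword_filter(disguised_advert))  # False: evades the filter
```

Telling these cases apart requires context (who is posting, what the post is asking people to do), not just vocabulary. This is the gap that smarter detection methods are trying to close.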

To sum up: detection methods are becoming smarter, but not smart enough. Illicit drug advertisements are not being caught in time, while harm reduction content is being taken down by mistake. As a result, drugs on social media have become normalised, treated like any other marketable product.

The rapid evolution of drug advertisements brings us to the following questions:

- How do these changes affect how young people perceive and interact with this content?

- How do we encourage young people to report illicit drug advertisements?

- How can social media platforms effectively detect these ads while not mistakenly censoring harm reduction content?

To answer these, we need better data. And it needs to come from young people themselves.

Social media companies need to know what young people are seeing on their platforms to train their algorithms to recognise such content effectively. Practitioners and regulators need to know which drugs are advertised most, and harm reduction professionals need to know whether their prevention messages are being seen.

First UK national survey on drugs on social media: how to get involved

To gather this data, we are conducting a national survey of UK students aged 13 to 18 in collaboration with drug education charities the Daniel Spargo-Mabbs Foundation and The Alcohol Education Trust. This is the first ever large-scale survey on this topic in the UK.

We are working directly with three major social media providers (Meta, Snap and TikTok), as well as with government and Ofcom, who are making important decisions about young people’s safety online on an ongoing basis.

We would like to invite all interested schools and teachers to participate in this important research. If you would like your school or class to take part, or you know anyone working in a secondary school who might be interested, please click here. All the relevant information, instructions and the link to the survey can be found there.

The more responses we get, the more valuable the data will be and the more difference it can make. It is also a great activity for a PSHE lesson or form time.

The findings of this survey will be shared with participating schools and with stakeholders including law enforcement, government, social media companies and charities. Our aim is to provide evidence to all relevant actors to address this problem. This research may prompt further evaluation of proactive detection techniques and online safety prevention campaigns.

It is vital to understand how this issue is affecting young people in the UK to create effective solutions to prevent it.

Ashly Fuller is a PhD researcher in the Department of Security and Crime Science at UCL. She investigates how to address and prevent the advertisement and sale of illicit drugs on social media to young people. You can reach her at ashly.fuller.16@ucl.ac.uk or on X at @FullerAshly.